Updated: July 2021

This article will demonstrate how to download CSV (comma-separated values) and Excel data from Java web applications using Data Pipeline. It will also show one way to plug Data Pipeline into your JSPs.

Data Pipeline is often used in non-GUI applications to move data from one place/format to another. However, Data Pipeline can just as easily be plugged into your web, mobile, and desktop applications. Being as lightweight and Java-centric as it is, it is perfect for handling your data conversion and manipulation use-cases.

Overview

Here is a simple, read-only web app to display a list of events (conferences, meetings, appointments, etc.). The app’s functionality can be divided into 3 groups:

- Create and populate the database

- Read and transform data

- Export/render data

The overall design is based on the Model–View–Controller (MVC) architecture. Data Pipeline’s Record and RecordList classes will constitute the model. A single JSP will present the view. A RESTful resource (using JBoss’ RESTEasy framework) will be the controller.

The full source and configuration are included with the download for this blog.

1. Create and populate the database

The first step is to set up a database and fill it with test data. To save time, we use a HyperSQL, in-memory database with no persistence to disk.

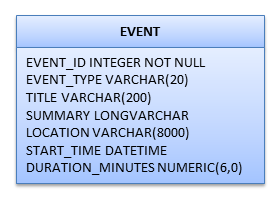

The data model is minimal, just a single event table that we create and populate each time the app is loaded.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 |

static { // Create EVENT table db.executeUpdate("CREATE TABLE EVENT (" + " EVENT_ID INTEGER GENERATED BY DEFAULT AS IDENTITY (START WITH 1, INCREMENT BY 1) not null, " + " EVENT_TYPE VARCHAR(20)," + " TITLE VARCHAR(200)," + " SUMMARY LONGVARCHAR," + " LOCATION VARCHAR(8000)," + " START_TIME DATETIME," + " DURATION_MINUTES NUMERIC(6,0)" + ")"); // Populate EVENT table with data for (int i = 0; i < 10; i++) { db.executeUpdate("INSERT INTO EVENT(EVENT_TYPE, TITLE, SUMMARY, LOCATION, START_TIME, DURATION_MINUTES) " + " VALUES('Business', 'Title "+i+"', 'Summary "+i+"', 'Location "+i+"', '2012-12-03 8:45:00', 135)"); } } |

2. Read and transform events

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 |

private DataReader getEvents() { DataReader reader = new JdbcReader(db.getConnection(), "SELECT * FROM EVENT"); reader = new TransformingReader(reader) .add(new CopyField("DURATION_MINUTES", "DURATION", true)) .add(new BasicFieldTransformer("DURATION").numberToMinutes()) ; reader = new TransformingReader(reader) .add(new SetCalculatedField("END_TIME", "START_TIME + DURATION")) ; reader = new TransformingReader(reader) .add(new CopyField("START_TIME", "START", true)) .add(new BasicFieldTransformer("START").dateTimeToString("EE MMM d, yyyy 'at' h:mm a")) ; reader = new TransformingReader(reader) .add(new CopyField("END_TIME", "END", true)) .add(new BasicFieldTransformer("END").dateTimeToString("h:mm a")) ; return reader; } |

Next, we read the events data using getEvents(). This method will be used internally by the data exporters and JSP render. Its job is to retrieve a stream of records from the database and enrich it with new fields. The retrieval step is done by instantiating a JdbcReader and passing it a database connection and a SQL query. The JdbcReader is then wrapped by several TransformingReaders that do the following:

- Copy the existing DURATION_MINUTES field to a new field (DURATION), then convert the new field from a number to an interval (as minutes). Data Pipeline intervals, like Oracle intervals, hold a quantity of time that can be used in datetime expressions.

- Create a new calculated field (END_TIME) by adding START_TIME and DURATION

- Copy START_TIME to START then convert it from a datetime to a formatted string

- copy END_TIME to END then convert it from a datetime to a formatted string

This demo uses several transformers for clarity, but the add(Transformer...) calls could just have easily been made on a single TransformingReader instance.

3. Export CSV

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 |

@GET @Path("/events.csv") public Response getEventsAsCsv(@Context HttpServletResponse response) throws Throwable { DataReader reader = getEvents(); response.setContentType("text/csv"); response.setHeader("Content-Disposition", "attachment; filename=\"events.csv\""); PrintWriter printWriter = response.getWriter(); DataWriter writer = new CSVWriter(printWriter).setFieldNamesInFirstRow(true); JobTemplate.DEFAULT.transfer(reader, writer); return Response.ok().build(); } |

Next we serve up the first file using getEventsAsCsv(). This method takes the record stream from getEvents() and converts it to a CSV text stream on-the-fly. getEventsAsCsv() can be used to return huge datasets since the CSVWriter is writing records directly to the HTTP response stream without any buffering or batching.

4. Export Excel

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 |

@GET @Path("/events.excel") public Response getEventsAsExcel(@Context HttpServletResponse response) throws Throwable { DataReader reader = getEvents(); ExcelDocument excelDocument = new ExcelDocument(); DataWriter writer = new ExcelWriter(excelDocument).setFieldNamesInFirstRow(true); JobTemplate.DEFAULT.transfer(reader, writer); response.setContentType("application/vnd.ms-excel"); response.setHeader("Content-Disposition", "attachment; filename=\"events.xls\""); OutputStream outputStream = response.getOutputStream(); excelDocument.save(outputStream); return Response.ok().build(); } |

The second file is served up using getEventsAsExcel(). This method takes the record stream from getEvents() and converts it to an in-memory Excel document before sending the entire thing to the HTTP response stream. Unlike getEventsAsCsv(), this method has to allocate memory to buffer all of the Excel document’s records.

5. Render JSP

The final step is to present the following data grid and download links for the CSV and Excel files. For simplicity’s sake, we’ll display all records in the table and omit any paging, sorting, or filtering functions.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 |

@GET @Path("/events") public void getEventsAsHtml( @Context HttpServletRequest request, @Context HttpServletResponse response) throws Throwable { DataReader reader = getEvents(); MemoryWriter writer = new MemoryWriter(); JobTemplate.DEFAULT.transfer(reader, writer); RecordList recordList = writer.getRecordList(); request.setAttribute("recordList", recordList); request.getRequestDispatcher("/WEB-INF/jsp/events.jsp").forward(request, response); } |

Like previously, getEventsAsHtml() reads the record stream from getEvents(), but now collects them into a RecordList. The collected records are then passed onto the JSP for rendering.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 |

<%@ page language="java" contentType="text/html; charset=UTF-8" pageEncoding="UTF-8"%> <%@ page import="com.northconcepts.datapipeline.core.Record" %> <%@ page import="com.northconcepts.datapipeline.core.RecordList" %> <!DOCTYPE html PUBLIC "-//W3C//DTD HTML 4.01 Transitional//EN" "http://www.w3.org/TR/html4/loose.dtd"> <html> <head> <meta http-equiv="Content-Type" content="text/html; charset=UTF-8"> <title>Events</title> </head> <body> Export as <a href="/events1/events.csv">CSV</a> | <a href="/events1/events.excel">Excel</a> <% RecordList recordList = (RecordList)request.getAttribute("recordList"); %> <table cellpadding="4" cellspacing="0" border="1"> <thead> <tr> <% for(String fieldName : recordList.get(0).getFieldNames()) { %> <td><%=fieldName%></td> <% } %> </tr> </thead> <tbody> <% for(int row=0; row<recordList.getRecordCount(); row++) { %> <tr> <% Record record = recordList.get(row); for(int col=0; col<record.getFieldCount(); col++) { %> <td><%=record.getField(col).getValue()%></td> <% } %> </tr> <% } %> </tbody> </table> </body> </html> |

The JSP renders the 2 download links followed by a data grid. Since the grid is built dynamically, it should handle most tables (as long as there’s data and any HTML in the fields are escaped).

Running the example

That’s it. To try the example app for yourself:

- Download the project.

- Download the latest Data Pipeline and unpack it into the project’s WEB-INF\lib folder.

- Run the ant build script.

- Deploy the generated war to your server of choice.

- Visit http://localhost:8080/events1/ (adjust the port as needed).

Happy coding!

Hi ,

I need to export data to excel from html (excel content type) which has more than 90k rows.

this leads to memory and server crash issue.

Can this product help me to streamline this process

thanks,

nandha

Pingback: North Concepts

Pingback: Export CSV and Excel from Java web apps With Data Pipeline | Dinesh Ram Kali.

Pingback: Import CSV and Excel to Java Web Apps with Data Pipeline

Pingback: How to create multiple sheets in a single Excel file

Pingback: Uma Proposta de Design para Composição e Geração de Arquivos CSV | Atitude Reflexiva