Updated: May 2023

ETL (extract, transform, load) is a process for moving data between systems. It extracts data from a variety of sources and formats, transforms it into a standard structure, and loads it into a database, file, web service, or other system for analysis, visualization, machine learning, and more.
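To make the three stages concrete, here is a minimal, dependency-free Java sketch. It assumes a tiny in-memory CSV as the source and a `Map` standing in for the target database table. The class and method names are illustrative only and do not belong to any of the tools below.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class EtlSketch {
    // The standard structure every source row is transformed into.
    record Customer(String id, String name, int age) {}

    // Extract: split raw CSV lines into fields, skipping the header row.
    static List<String[]> extract(List<String> csvLines) {
        List<String[]> rows = new ArrayList<>();
        for (int i = 1; i < csvLines.size(); i++) {
            rows.add(csvLines.get(i).split(",", -1));
        }
        return rows;
    }

    // Transform: trim whitespace, upper-case names and parse ages as integers.
    static List<Customer> transform(List<String[]> rows) {
        List<Customer> customers = new ArrayList<>();
        for (String[] r : rows) {
            customers.add(new Customer(r[0].trim(), r[1].trim().toUpperCase(), Integer.parseInt(r[2].trim())));
        }
        return customers;
    }

    // Load: upsert each customer into the target "table", keyed by id.
    static Map<String, Customer> load(List<Customer> customers, Map<String, Customer> table) {
        for (Customer c : customers) {
            table.put(c.id(), c);
        }
        return table;
    }

    public static void main(String[] args) {
        List<String> source = List.of("id,name,age", " 1 , alice ,34", "2,Bob, 41");
        Map<String, Customer> table = load(transform(extract(source)), new LinkedHashMap<>());
        table.values().forEach(System.out::println);
    }
}
```

Real ETL tools add what this sketch leaves out, such as streaming large inputs, error handling, and connectors for actual databases and services.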

ETL tools come in a wide variety of shapes. Some run on your desktop or on-premises servers, while others run as SaaS in the cloud. Some are code-based, built on standard programming languages that many developers already know. Others are built on a custom DSL (domain-specific language) designed to describe data flows more declaratively and with less code. Others still are completely graphical, only offering programming interfaces for complex transformations.

What follows is a list of ETL tools that developers already familiar with Java and the JVM (Java Virtual Machine) can use to clean, validate, filter, and prepare their data.

1. Data Pipeline

Data Pipeline is our own tool. It’s an ETL framework you plug into your software to load, process, and migrate data on the JVM.

It uses a single API, modeled after the Java I/O classes, to handle data in a variety of formats and structures. Its single-piece-flow approach, which passes each record through the whole pipeline before reading the next, allows it to handle huge amounts of data with minimal overhead while still being able to scale using multi-threading. This approach also allows it to process both batch and streaming data through the same pipelines.
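The single-piece-flow idea can be sketched with a chain of readers that wrap one another, much as `BufferedReader` wraps a `Reader`. The `RecordReader` interface and helper methods below are hypothetical and are not Data Pipeline's actual API. The sketch only shows how each record is pulled through every step and handed to the writer before the next one is read, so memory use does not grow with the size of the input.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.Iterator;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;
import java.util.function.Predicate;
import java.util.function.UnaryOperator;

final class SinglePieceFlowSketch {
    // Hypothetical reader interface: returns the next record, or null when exhausted
    // (the same convention as BufferedReader.readLine()).
    interface RecordReader {
        Map<String, String> read();
    }

    // Source: pulls records one at a time from any iterator.
    static RecordReader source(Iterator<Map<String, String>> it) {
        return () -> it.hasNext() ? it.next() : null;
    }

    // Decorator that transforms each record as it passes through.
    static RecordReader transforming(RecordReader in, UnaryOperator<Map<String, String>> fn) {
        return () -> {
            Map<String, String> r = in.read();
            return r == null ? null : fn.apply(r);
        };
    }

    // Decorator that skips records failing the predicate.
    static RecordReader filtering(RecordReader in, Predicate<Map<String, String>> keep) {
        return () -> {
            Map<String, String> r;
            while ((r = in.read()) != null) {
                if (keep.test(r)) {
                    return r;
                }
            }
            return null;
        };
    }

    // Builds source -> transform -> filter and drains it into the writer one record at a time.
    static long run(List<Map<String, String>> input, Consumer<Map<String, String>> writer) {
        RecordReader chain = filtering(
                transforming(source(input.iterator()), r -> {
                    Map<String, String> copy = new HashMap<>(r);
                    copy.put("name", copy.get("name").toUpperCase());
                    return copy;
                }),
                r -> "UK".equals(r.get("country")));
        long count = 0;
        Map<String, String> rec;
        while ((rec = chain.read()) != null) {
            writer.accept(rec); // written before the next record is even read
            count++;
        }
        return count;
    }

    public static void main(String[] args) {
        List<Map<String, String>> input = List.of(
                Map.of("name", "ada", "country", "UK"),
                Map.of("name", "linus", "country", "FI"));
        List<Map<String, String>> out = new ArrayList<>();
        System.out.println(run(input, out::add) + " record(s) written: " + out);
    }
}
```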

Data Pipeline comes in a range of versions including a free Express edition.

2. Scriptella ETL

Scriptella is an open source ETL tool written in Java.

The tool has been designed with simplicity in mind. Despite its modest feature set, it does not require you to learn a complex XML-based transformation language; instead, it lets you execute scripts in a variety of languages, including SQL, JavaScript, Velocity and JEXL.

3. Cascading

Cascading is a Java API for data processing.

The API gives you a wide range of capabilities for solving business problems, such as sorting, averaging, filtering and merging data. With Cascading you don’t have to install anything; all dependencies are managed through Maven. It follows the separation-of-concerns design principle out of the box: business logic can be written separately from integration logic by using its Pipe and Tap abstractions.

Cascading supports reading and writing from a wide range of external sources. While you can build your own schemes and taps, you are also provided with pre-built taps and schemes.
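The split between Pipes (business logic) and Taps (integration logic) can be illustrated in plain Java. The `SourceTap` and `SinkTap` interfaces and the `averageByKey` function below are hypothetical stand-ins, not Cascading's classes. The point is that the averaging logic never touches I/O, so the same logic can be wired to a file, a database or an in-memory list without changes.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.Function;

final class PipeTapSketch {
    // Integration logic: where records come from and where they go (hypothetical, not Cascading's Tap).
    interface SourceTap {
        List<String> read();
    }

    interface SinkTap {
        void write(List<String> records);
    }

    // Business logic: averages "key,amount" records per key, with no knowledge of any I/O.
    static List<String> averageByKey(List<String> records) {
        Map<String, double[]> totals = new TreeMap<>(); // key -> {sum, count}, sorted by key
        for (String rec : records) {
            String[] fields = rec.split(",");
            double[] t = totals.computeIfAbsent(fields[0], k -> new double[2]);
            t[0] += Double.parseDouble(fields[1]);
            t[1] += 1;
        }
        List<String> out = new ArrayList<>();
        totals.forEach((key, t) -> out.add(key + "," + (t[0] / t[1])));
        return out;
    }

    // Wiring: connects any source and sink to any piece of business logic.
    static void runFlow(SourceTap source, Function<List<String>, List<String>> logic, SinkTap sink) {
        sink.write(logic.apply(source.read()));
    }

    public static void main(String[] args) {
        // These lambdas could be swapped for file- or database-backed taps without touching averageByKey.
        SourceTap source = () -> List.of("books,10", "games,30", "books,20");
        SinkTap sink = records -> records.forEach(System.out::println);
        runFlow(source, PipeTapSketch::averageByKey, sink);
    }
}
```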

4. Apache Oozie

Apache Oozie is a Java web application used for scheduling Apache Hadoop jobs.

It is a reliable and scalable tool that combines multiple jobs sequentially into a single logical unit of work. It comes with built-in support for various Hadoop jobs such as Java MapReduce, Streaming MapReduce, Pig, Hive, Sqoop and DistCp.

Apache Oozie also supports system-specific jobs such as shell scripts and Java programs.
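Conceptually, an Oozie workflow runs a sequence of actions, moving on to the next action when one succeeds and to a kill node when one fails. In Oozie itself this is declared in an XML workflow definition whose action nodes carry `ok` and `error` transitions. The plain-Java sketch below only mimics that control flow; the `Action` interface and the action names are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class WorkflowSketch {
    // One step in the workflow; returns true on success (hypothetical, not Oozie's API).
    interface Action {
        boolean run();
    }

    // Runs the actions in insertion order as one logical unit: on the first failure,
    // transition to "kill"; if every action succeeds, reach "end".
    // Returns the nodes visited, in order.
    static List<String> runWorkflow(Map<String, Action> actions) {
        List<String> visited = new ArrayList<>();
        for (Map.Entry<String, Action> step : actions.entrySet()) {
            visited.add(step.getKey());
            if (!step.getValue().run()) {
                visited.add("kill"); // error transition
                return visited;
            }
        }
        visited.add("end"); // every ok transition taken
        return visited;
    }

    public static void main(String[] args) {
        Map<String, Action> workflow = new LinkedHashMap<>(); // preserves action order
        workflow.put("sqoop-import", () -> true);
        workflow.put("hive-transform", () -> true);
        workflow.put("distcp-export", () -> false); // simulate a failing job
        System.out.println(runWorkflow(workflow));
    }
}
```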

5. ETLWorks (Formerly Toolsverse)

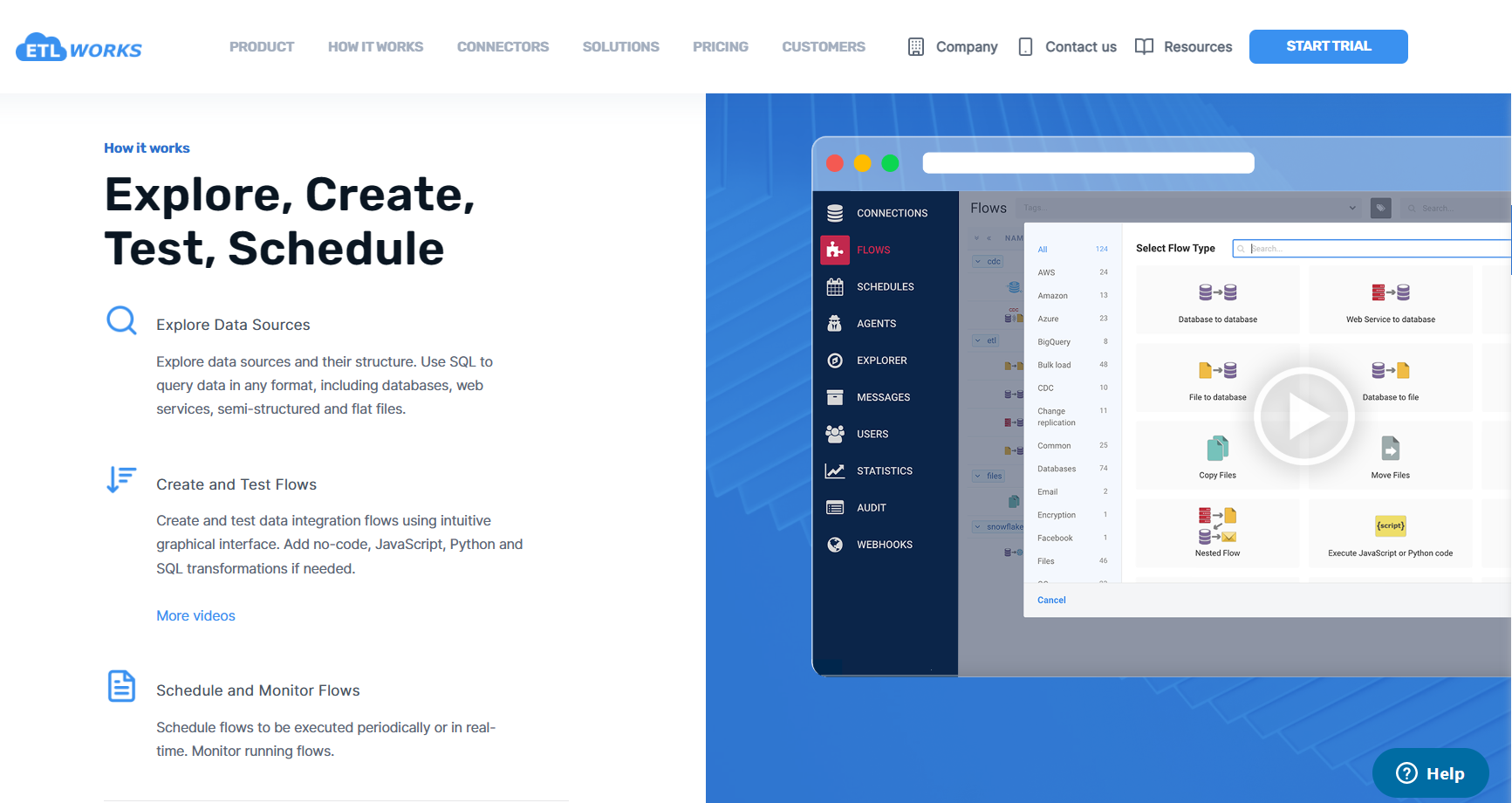

ETLWorks (formerly Toolsverse) is a commercial ETL tool with a 14-day free trial.

The extract-transform-load engine comes with executables for major platforms and supports integration into other applications. A library of built-in transformations helps you get up to speed with little effort. The ETLWorks framework can be embedded into a Java application or deployed as a web application, and ETLWorks also offers cloud-based REST APIs. In addition, you can build your own transformations and connectors using JavaScript, Java and SQL.

6. GETL

GETL is a free ETL engine based on a series of libraries.

These libraries are used to extract, transform and load data into programs written in Java, Groovy and other JVM languages that can work with Java classes and objects.

GETL automates the data loading and transformation process. In addition, its classes have a simple hierarchy, which makes it easy to connect with other solutions.

7. CloverDX

CloverDX is a commercial data management platform with a 45-day free trial.

It supports data integration from various data sources and formats such as CSV, Excel and JDBC. Its graphical interface makes it easier for non-developers to perform data transformation tasks and gives an intuitive view of the data connections between sources and applications. It can be used for basic tasks as well as for complex, data-intensive jobs.

8. Oracle Data Integrator

Oracle Data Integrator is a data integration tool.

It supports requirements such as high-volume, high-performance batch loads and SOA-enabled data services, as well as the movement and transformation of large data volumes. Its flow-based user interface makes designing integration processes intuitive.

It is a free tool. An enterprise edition is also available.

9. Smooks

Smooks is an open source, extensible, Java-based ETL engine, built on top of DOM and SAX, that works with structured data.

It supports transforming data from multiple sources in formats such as XML, CSV, EDI and JSON into multiple target formats, again including XML, CSV, EDI and JSON. With Smooks you can apply a transformation to just a portion of the data source or to the entire data source. You can write and use your own custom event handlers, or choose from the wide array of solutions shipped with the Smooks distribution.

With Smooks you can bind data from sources such as XML, CSV, EDI and JSON into a Java object model. It can process messages that are gigabytes in size, thanks to its SAX-based streaming filter. The stream of messages can be split, transformed and routed to different data sources and databases, and multiple data sources and databases can also be used to feed these messages.
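The streaming approach behind a SAX-based filter can be demonstrated with the SAX parser that ships with the JDK (`SAXParserFactory` and `DefaultHandler`). This is plain JDK code, not Smooks's configuration model. It assumes a hypothetical message format of `<order>` elements with `id` and `region` attributes, and routes each order by region as soon as its start tag is parsed, without ever building an in-memory tree of the document.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import javax.xml.parsers.ParserConfigurationException;
import javax.xml.parsers.SAXParserFactory;
import org.xml.sax.Attributes;
import org.xml.sax.SAXException;
import org.xml.sax.helpers.DefaultHandler;

final class SaxStreamingSketch {
    // Streams through the XML and routes each <order> element's id into a bucket named
    // after its "region" attribute. Only the ids are kept, never a tree of the document.
    static Map<String, List<String>> routeOrders(String xml) {
        Map<String, List<String>> routes = new TreeMap<>();
        DefaultHandler handler = new DefaultHandler() {
            @Override
            public void startElement(String uri, String localName, String qName, Attributes atts) {
                if ("order".equals(qName)) {
                    routes.computeIfAbsent(atts.getValue("region"), k -> new ArrayList<>())
                          .add(atts.getValue("id"));
                }
            }
        };
        try {
            SAXParserFactory.newInstance().newSAXParser()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)), handler);
        } catch (ParserConfigurationException | SAXException | IOException e) {
            throw new IllegalStateException("could not parse message stream", e);
        }
        return routes;
    }

    public static void main(String[] args) {
        String xml = "<orders>"
                + "<order id=\"1\" region=\"EU\"/>"
                + "<order id=\"2\" region=\"US\"/>"
                + "<order id=\"3\" region=\"EU\"/>"
                + "</orders>";
        System.out.println(routeOrders(xml)); // prints {EU=[1, 3], US=[2]}
    }
}
```

For a multi-gigabyte file you would pass a `FileInputStream` to `parse` instead of an in-memory string; the handler code stays the same.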

10. Spring Batch

Spring Batch is a lightweight, scalable, open source batch processing framework.

Based on the POJO development approach of the Spring Framework, it is designed to let developers build robust batch applications for vital business operations. Spring Batch comes with reusable functions that can be used when processing data, such as logging and tracing, transaction management, job processing statistics, job start, stop and restart, skipping, and resource management. It supports a wide array of data input and output sources such as files, JDBC, NoSQL and JMS, to name a few.

Some of Spring Batch's advanced features, such as its optimization and partitioning techniques, can be leveraged for high-volume, high-performance batch jobs.
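Spring Batch's core model is chunk-oriented processing: a reader supplies items one at a time, a processor transforms each item, and a writer receives them in chunks, with faulty items optionally skipped. Spring Batch expresses these roles through its `ItemReader`, `ItemProcessor` and `ItemWriter` interfaces. The dependency-free sketch below only imitates that flow with standard Java functional types; `runStep` and `StepResult` are hypothetical names, and there are no transactions here.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.function.Consumer;
import java.util.function.Function;

final class ChunkSketch {
    // Per-step counters, similar in spirit to the statistics Spring Batch records.
    record StepResult(int read, int written, int skipped, int chunks) {}

    // Reads items one by one, processes each, and writes them in chunks of chunkSize.
    // A processor returning null filters the item out (as with Spring Batch's ItemProcessor).
    // Items whose processing throws are skipped, up to skipLimit; beyond that the step fails.
    static <I, O> StepResult runStep(Iterator<I> reader, Function<I, O> processor,
                                     Consumer<List<O>> writer, int chunkSize, int skipLimit) {
        int read = 0, written = 0, skipped = 0, chunks = 0;
        List<O> chunk = new ArrayList<>();
        while (reader.hasNext()) {
            I item = reader.next();
            read++;
            try {
                O out = processor.apply(item);
                if (out != null) {
                    chunk.add(out);
                }
            } catch (RuntimeException e) {
                if (++skipped > skipLimit) {
                    throw new IllegalStateException("skip limit exceeded", e);
                }
            }
            if (chunk.size() == chunkSize) {
                // Spring Batch wraps each chunk write in a transaction; this sketch does not.
                writer.accept(List.copyOf(chunk));
                written += chunk.size();
                chunks++;
                chunk.clear();
            }
        }
        if (!chunk.isEmpty()) { // flush the final partial chunk
            writer.accept(List.copyOf(chunk));
            written += chunk.size();
            chunks++;
        }
        return new StepResult(read, written, skipped, chunks);
    }

    public static void main(String[] args) {
        List<String> lines = List.of("10", "20", "oops", "40", "50");
        StepResult result = ChunkSketch.<String, Integer>runStep(
                lines.iterator(), Integer::parseInt,
                chunk -> System.out.println("writing chunk " + chunk), 2, 1);
        System.out.println(result);
    }
}
```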

11. Easy Batch

Easy Batch is a lightweight Java framework built with the purpose of removing complexity in batch processing.

Easy Batch lets you focus on your business logic while the framework takes care of reading, writing, filtering, parsing and validating data. You can define data validation constraints on your domain objects, and Easy Batch handles the validation code for you. Development in Easy Batch is POJO-based, so you map your data to your own domain objects in a natural, object-oriented way.

Easy Batch reduces processing time by letting you run multiple jobs in parallel, and job progress and execution can be monitored in real time using JMX. Among its salient features are a very small memory footprint and having no dependencies.

Easy Batch can be run in two ways: embedded in an application server or as a standalone application.
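The read, map, validate and filter cycle described above can be sketched without any framework. The `Product` type, the `sku;name;price` record layout and the helper methods are hypothetical. In Easy Batch the validation constraints would live on the domain object itself rather than in a hand-written `violations` method as here.

```java
import java.util.ArrayList;
import java.util.List;

final class ValidatingMapperSketch {
    // Domain object (POJO-style) that each raw record is mapped onto.
    record Product(String sku, String name, double price) {}

    // Mapper: turns one "sku;name;price" record into a Product.
    static Product map(String line) {
        String[] f = line.split(";", -1);
        return new Product(f[0].trim(), f[1].trim(), Double.parseDouble(f[2].trim()));
    }

    // Validator: the constraints on Product, returned as a list of violations.
    static List<String> violations(Product p) {
        List<String> v = new ArrayList<>();
        if (p.sku().isEmpty()) {
            v.add("sku must not be blank");
        }
        if (p.price() <= 0) {
            v.add("price must be positive");
        }
        return v;
    }

    // Pipeline: map -> validate -> keep valid products; rejected records go to the error list.
    static List<Product> process(List<String> lines, List<String> rejected) {
        List<Product> valid = new ArrayList<>();
        for (String line : lines) {
            try {
                Product p = map(line);
                List<String> v = violations(p);
                if (v.isEmpty()) {
                    valid.add(p);
                } else {
                    rejected.add(line + " -> " + v);
                }
            } catch (ArrayIndexOutOfBoundsException | NumberFormatException e) {
                rejected.add(line + " -> malformed record"); // wrong field count or bad number
            }
        }
        return valid;
    }

    public static void main(String[] args) {
        List<String> rejected = new ArrayList<>();
        List<Product> ok = process(List.of("A1;Lamp;19.5", ";Desk;80", "B2;Chair;-3", "C3;Sofa"), rejected);
        System.out.println("valid: " + ok);
        rejected.forEach(System.out::println);
    }
}
```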

12. Apache Camel

Apache Camel is an open source integration framework in Java that can be used to exchange, transform and route data among applications with different protocols.

It is a lightweight, rule-based routing and mediation engine that provides a Java object-based implementation of Enterprise Integration Patterns (EIPs), configured through either a Java API or a domain-specific language. With Apache Camel, you can define your own routing rules, decide which sources to accept messages from, and determine how to process those messages and send them on to other components of the application. Transports and messaging frameworks such as HTTP, JMS and CXF are addressed through URIs.

Apache Camel can be deployed in a web container or run as a standalone application.
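One of the most common EIPs is the content-based router, which inspects each message and sends it to an endpoint chosen by a rule. In Camel this is written with the DSL's `choice()`, `when()` and `otherwise()` inside a `RouteBuilder`. The plain-Java sketch below imitates only the routing decision; its `Router` class and endpoint strings are hypothetical and merely resemble Camel-style URIs.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

final class ContentRouterSketch {
    // One routing rule: if the condition matches, send the message to the endpoint.
    record Rule(Predicate<String> condition, String endpoint) {}

    // Minimal content-based router: rules are evaluated in order, the first match wins,
    // and unmatched messages go to the fallback endpoint (like an "otherwise" branch).
    static final class Router {
        private final List<Rule> rules = new ArrayList<>();
        private final String otherwise;

        Router(String otherwise) {
            this.otherwise = otherwise;
        }

        Router when(Predicate<String> condition, String endpoint) {
            rules.add(new Rule(condition, endpoint));
            return this;
        }

        String route(String message) {
            for (Rule rule : rules) {
                if (rule.condition().test(message)) {
                    return rule.endpoint();
                }
            }
            return otherwise;
        }
    }

    public static void main(String[] args) {
        Router router = new Router("log:unmatched")
                .when(m -> m.startsWith("<"), "jms:queue:xmlOrders")
                .when(m -> m.startsWith("{"), "http://example.com/json-orders");
        for (String msg : List.of("<order id=\"1\"/>", "{\"id\": 2}", "id=3")) {
            System.out.println(msg + " -> " + router.route(msg));
        }
    }
}
```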

13. Apache NiFi

Apache NiFi is an open source stream processing tool. It can publish data to, and subscribe to data from, a range of streaming sources. The streamed data can then be passed through a series of processing steps that infer and extract information from it.

Like comparable tools, NiFi can run in clusters, perform distributed processing, secure data communication over SSL, keep latency low and offer fail-safe reliability. To hide the complexity of stream processing tasks, NiFi provides a web-based graphical user interface that simplifies the configuration work required for processing streams.

Summing up, NiFi offers a versatile, open source stream processing framework that can complement the batch processing capabilities of engines such as Spark.

14. Jaspersoft

Jaspersoft is an ETL tool that is commonly used for creating data warehouses from transactional data.

The tool allows for a combination of relational and non-relational data sources. It also includes a business modeler for a non-technical view of the information workflow and a job designer for displaying and editing ETL steps. A debugger also exists for real-time debugging.

Other features include multiple output targets such as XML, databases and web services, as well as native connectivity to ERP, CRM and Salesforce applications.

15. Apatar

Apatar is an open source ETL tool based on Java.

Its feature set includes single-interface project integration, a visual job designer for non-developers, bi-directional integration, platform independence and the ability to work with a wide range of applications and data sources such as Oracle, MS SQL and JDBC. These features make it a rival to commercial solutions while also keeping it highly extensible.

16. Apache Spark

Apache Spark provides stream as well as batch processing.

Its streaming library, Spark Streaming, acts as a fault-tolerant framework that can process real-time data at high throughput. Data is handled as streams and can be read from a wide variety of sources including Kafka, Twitter and ZeroMQ. In addition, custom streaming sources can be defined for wider data coverage.

Spark Streaming divides the incoming data into a series of small, successive batches, which the Spark engine then processes to produce the results as a stream of batches. This processing can use high-level operations such as map, reduce, join and window, as well as Spark's machine learning and graph processing algorithms. The end results can be pushed out to file systems, databases and live dashboards.
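The micro-batch and window ideas can be illustrated without Spark. The sketch below buckets timestamped events into fixed batch intervals and then computes a sliding-window sum that advances one batch at a time, roughly what a windowed reduce does over a DStream in Spark Streaming, where the window length and slide interval are multiples of the batch interval. The `Event` type and `windowedSums` method are hypothetical, and the code runs on a single thread with none of Spark's distribution or fault tolerance.

```java
import java.util.ArrayList;
import java.util.List;

final class MicroBatchSketch {
    // A timestamped event from some stream (hypothetical type; timestamps assumed non-negative).
    record Event(long timestampMillis, int value) {}

    // 1) Buckets events into micro-batches of batchIntervalMillis each.
    // 2) For every batch, sums the values of the last windowBatches batches
    //    (a sliding window that slides by one batch interval).
    static List<Integer> windowedSums(List<Event> events, long batchIntervalMillis, int windowBatches) {
        if (events.isEmpty()) {
            return List.of();
        }
        long maxTs = events.stream().mapToLong(Event::timestampMillis).max().getAsLong();
        int[] batchSums = new int[(int) (maxTs / batchIntervalMillis) + 1];
        for (Event e : events) {
            batchSums[(int) (e.timestampMillis() / batchIntervalMillis)] += e.value();
        }
        List<Integer> windowed = new ArrayList<>();
        for (int i = 0; i < batchSums.length; i++) {
            int sum = 0;
            for (int j = Math.max(0, i - windowBatches + 1); j <= i; j++) {
                sum += batchSums[j];
            }
            windowed.add(sum);
        }
        return windowed;
    }

    public static void main(String[] args) {
        List<Event> events = List.of(new Event(0, 1), new Event(500, 2), new Event(1200, 3), new Event(2500, 4));
        // 1-second batches, 2-second window
        System.out.println(windowedSums(events, 1000, 2)); // prints [3, 6, 7]
    }
}
```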

Spark programs can be written in Java, Scala and Python, which makes it convenient to integrate Spark with existing software frameworks. Due to its flexibility, robustness, scalability and ease of adoption, Spark is used by a number of industry giants such as Uber, Pinterest and Netflix.

17. Bender

Bender is an open source, Java-based framework for building ETL modules that run on Amazon’s AWS Lambda.

Bender comes with built-in support for reading, writing, filtering and manipulating data from Amazon Kinesis Streams and Amazon S3. Developers can also build their own input handlers, deserializers, operations, wrappers, serializers, transporters and reporters. Extensive support and documentation are available for Bender, and its GitHub repository had over 184 stars at the time of writing.
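The extension points listed above form a chain that each Lambda invocation runs its records through. The sketch below mirrors that order (handler, deserializer, operations, serializer, transport) using plain Java functions. The method names and the `key=value;key=value` input format are hypothetical and are not Bender's real interfaces.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.Consumer;
import java.util.function.UnaryOperator;

final class LambdaChainSketch {
    // Deserializer: "key=value;key=value" text -> map (a stand-in for, say, JSON parsing).
    static Map<String, String> deserialize(String raw) {
        Map<String, String> event = new TreeMap<>();
        for (String pair : raw.split(";")) {
            String[] kv = pair.split("=", 2);
            event.put(kv[0], kv.length > 1 ? kv[1] : "");
        }
        return event;
    }

    // Operations: applied in order; an operation returning null filters the event out.
    static Map<String, String> applyOperations(Map<String, String> event,
                                               List<UnaryOperator<Map<String, String>>> operations) {
        for (UnaryOperator<Map<String, String>> op : operations) {
            event = op.apply(event);
            if (event == null) {
                return null;
            }
        }
        return event;
    }

    // Serializer: map -> one line of output text.
    static String serialize(Map<String, String> event) {
        return event.toString();
    }

    // Handler: processes the batch of raw records one invocation receives
    // (for example from a Kinesis stream or an S3 object) and hands results to the transport.
    static int handle(List<String> records, List<UnaryOperator<Map<String, String>>> operations,
                      Consumer<String> transport) {
        int sent = 0;
        for (String raw : records) {
            Map<String, String> event = applyOperations(deserialize(raw), operations);
            if (event != null) {
                transport.accept(serialize(event));
                sent++;
            }
        }
        return sent; // a reporter could publish this count as a metric
    }

    public static void main(String[] args) {
        List<UnaryOperator<Map<String, String>>> ops = List.of(
                e -> "DEBUG".equals(e.get("level")) ? null : e, // drop debug events
                e -> { e.put("env", "prod"); return e; });      // enrich the rest
        List<String> sent = new ArrayList<>();
        int n = handle(List.of("level=DEBUG;msg=tick", "level=ERROR;msg=disk full"), ops, sent::add);
        System.out.println(n + " sent: " + sent);
    }
}
```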

18. Talend Open Studio for Data Integration

Talend Open Studio for Data Integration is an open source tool that offers a wide range of data integration capabilities.

Its drag-and-drop graphical user interface lets non-programmers carry out complex integration tasks. Its large library of application connectors helps it work with databases, mainframes and web services. Other features include string manipulation, automatic lookup handling and management of slowly changing dimensions.

Which Java ETL tool do you use?

Have we left off your favorite Java ETL tool? Please add a comment letting us know about it.

Happy Coding!